"AI Is Rotting Your Brain" And Other Obvious Nonsense

Viral Tech Panic

So I saw a thread going around X, The Everything App™, where a Very Smart Startup Guy breathlessly claims a new MIT study proves ChatGPT is causing “measurable brain damage.” It’s got all the clickbait one could expect in this context: scary brain scans, productivity jargon, talk of “cognitive debt,” and a nice pitch for how you too can be “strategic” about AI, just like him. Also check out his company!

Thanks for reading! I'm Alex, COO at ColdIQ. Built a $4.5M ARR business in under 2 years. Started with two founders doing everything. Now we're a remote team across 10 countries, helping 200+ businesses scale through outbound systems.

—the last tweet in the thread (read: this is just marketing)

This is just more pandering to luddite panic, dressed up with EEG graphs and academic branding. Even the actual MIT study is mostly just a high-tech way of saying, “people who don’t work aren’t working.”

Groundbreaking.

The MIT Study

So what did the study actually find? Buckle up! As it turns out, when you use ChatGPT to “do your writing,” your brain is less engaged.

If you stop writing essays yourself, you get a little worse at writing essays! People relying on AI for essays remember less about what they “wrote,” and feel less ownership!

It’s like saying, “If you use a calculator for all your math, your long division gets rusty.”

Or, “If you only ever drive automatic, you forget how to work a clutch.”

Or, “If you save people into your contacts, you don’t memorize phone numbers.”

Stop the presses.

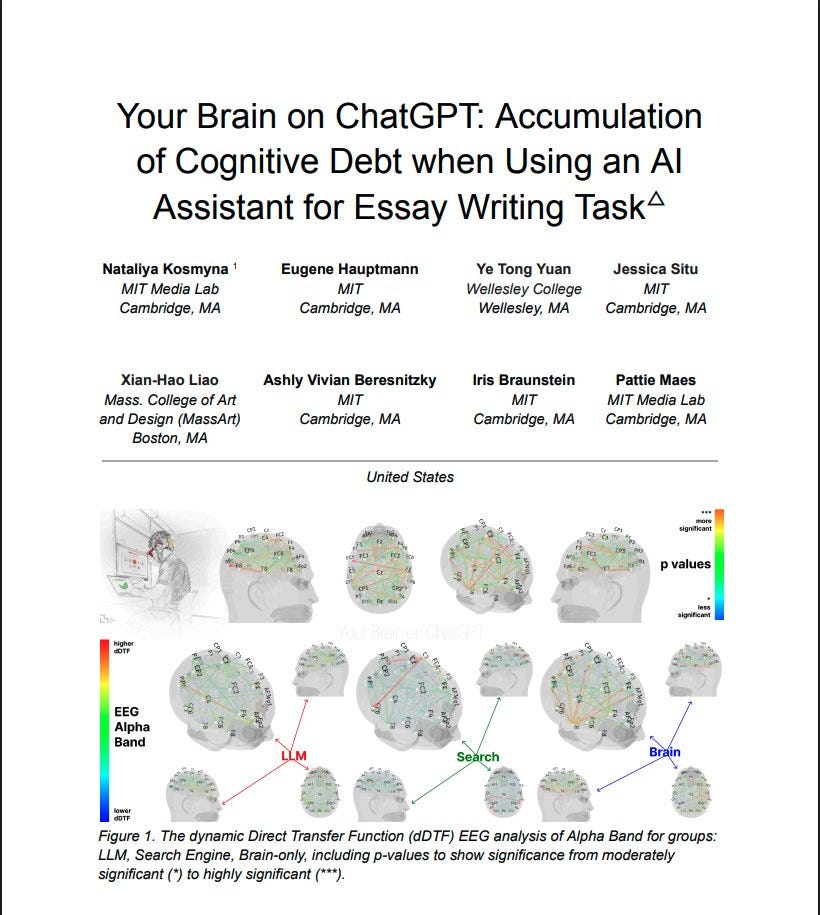

But a bigger problem is that the “measurable brain damage” Alex Vacca refers to amounts to “fewer alpha/beta waves on an EEG.” Which… doesn’t mean that. The study isn’t claiming “damage.”

A few observations that make the study itself not exactly “proof” of anything:

The study employs a tiny, practiced sample: 54 people at MIT, all regular essay writers. Most people using ChatGPT are not writing essays repeatedly for months, so this really isn’t an actual real-world AI use case.

The EEG stuff measures “engagement,” not “decline” or “damage.” The MIT study doesn’t present EEG readings as evidence of lasting harm, either. Take away the tool, you’re rusty… Okay? Big deal. Sometimes it’s good to automate what you don’t need to remember. Not all “offloading” is bad!

This isn’t to say this is a useless study; documenting these things isn’t necessarily a waste. This could be important information later. However, the limited scope of the study (measuring brain activity specifically while using large language models) makes me question the motive just a bit given the current climate around LLMs and “AI” in general.

At bare minimum, the limited scope tees up sensational nonsense and that has been annoying. Case in point: viral posts/threads about how AI is causing brain damage.

Panic for Clicks and Clout

Back to Alex Vacca’s thread. It kicks off with “BREAKING: MIT just completed the first brain scan study of ChatGPT users & the results are terrifying” and it ends with a pitch for the guy’s business. Can it possibly be more transparent?

The thread immediately claims AI isn’t helping with productivity, it’s “cognitively bankrupting us.” What the hell does that even mean? He (obviously) never tells us, but it sure does sound scary!

Next, we’re told that 83.3% of ChatGPT users couldn’t quote from essays they’d generated/written just minutes before. In other words, if you let the tool do the thinking, you don’t remember as much. But how!?

That’s not “AI brain damage.” That’s just not doing something and thus not remembering it.

Then comes the claim that “brain scans revealed the damage,” with neural connections “collapsing” from 79 to 42, supposedly a 47% reduction in brain connectivity. This is why I question the limited scope of the aforementioned study in the context of the current moment; if it had been deliberately egging on sensational content, it wouldn’t have had to look any different.

Alex wants you to picture your brain literally short-circuiting, but the reality is that the study tracked temporary changes in EEG connectivity. That’s similar to what a fitness tracker does when it says your activity is down because you sat on the couch all day.
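For what it’s worth, the arithmetic behind the “47%” figure does roughly check out; it’s the interpretation that doesn’t. Here is a minimal sketch of that calculation, using only the two numbers quoted in the thread (79 and 42). The function name `percent_reduction` is mine, purely for illustration, and nothing here says anything about what those connectivity counts actually mean.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative drop from `before` to `after`, expressed as a percentage."""
    if before == 0:
        raise ValueError("`before` must be non-zero to compute a relative drop")
    return (before - after) / before * 100


# Figures as quoted in the thread: connections "collapsing" from 79 to 42.
drop = percent_reduction(79, 42)
print(f"{drop:.1f}% reduction")  # 46.8% reduction
```

So the thread rounds 46.8% up to 47%. Fine. But a relative drop in a measurement taken during one kind of task is still just a relative drop in a measurement, not a diagnosis.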

He also claims that teachers “felt something was wrong” with the AI-generated essays, describing them as “soulless” and “empty.” This is just a new spin on the “machines have no soul” cliché. Maybe the essays do feel generic! If you don’t put anything of yourself into your writing, it will show. That’s not evidence of “cognitive bankruptcy,” it’s just bland content.

As I said in a previous essay, untouched AI outputs are simply aggregates, at a certain level of understanding, of prominent finished works. All the bland content you’ve always hated is exactly the same thing.

Alex continues: when ChatGPT users were forced to write without AI, they performed worse than people who never used it. This is framed as “cognitive atrophy,” like a muscle wasting away. But if you rely on GPS for years and suddenly have to navigate a new area without it, you’re going to get lost. That’s not atrophy, it’s just getting used to using a tool.

Finally, the dramatic finish: “measurable brain damage from AI overuse.” This is pure nonsense. EEG shows electrical patterns in the brain, not cell death or physical disease. Interpreting lower brainwave activity as “damage” is like saying sitting lowers your heart rate, so chairs are causing cardiac collapse.

Why does this stuff get so much attention? Because “AI is melting your brain” satisfies a certain fear that a large number of temporarily embarrassed millionaire IP landlords (often leftists via platonic elitism, but I doubt CEO Alex is looking to attract that exact crowd) really want content about right now. It sounds urgent, flatters the reader for being savvy, and it conveniently sets up the author’s sales pitch about “strategic” use at the end. It offers a simple villain and zero nuance: “AI bad,” “panic now,” and then a little #grindset at the end for good measure.

Conclusion

The truth isn’t that using a tool means you’re failing to do the work. It’s that the work itself evolves as tools evolve.

When calculators became standard, nobody stopped doing math; they just stopped spending brainpower on long division and started focusing on more complex (relational) problems.

When writing moved from pen and paper to word processors, we didn’t become illiterate! We wrote faster, revised more, and published more widely.

The panic around “AI causing brain damage” isn’t genuinely about a loss of ability. It’s nostalgia and/or the fetishizing of struggle. Of course, if you rely on a tool for everything, you’ll get rusty at whatever that tool automates. But that’s not the end of intelligence or creativity. It just means the bar is moving, as it always does.

Don’t buy doomer hype. Not just about this, either! It’s not over and we’re not cooked.

With AI and automation, yes, be thoughtful about what you’re outsourcing. But recognize that technology changes the meaning of “doing the work,” and often makes it possible to do entirely new kinds of work.

Your brain is not damaged if you use AI. If anything, it’s adapting—just like it always has.
